(FULL UP) Crowd-driven Music: Interactive and Generative Approaches using Machine Vision and Manhattan

19:00 – 21:00 (GMT+1)

Hosted by:

Chris Nash (Lead Organiser), UWE Bristol

Corey Ford (Technical Support), UWE Bristol

Maximum number of participants: 25

Abstract

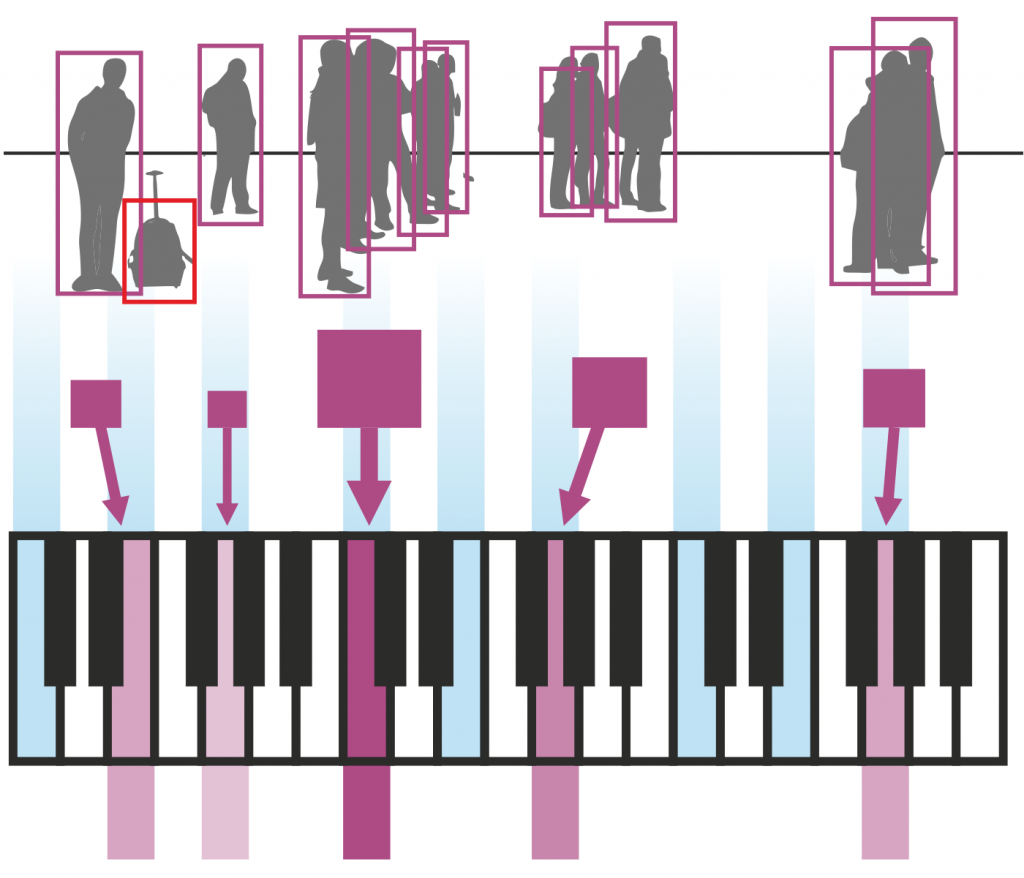

This workshop offers a practical opportunity to code mappings from chaotic crowds to structured music. It uses Manhattan (http://nash.audio/manhattan), an end-user programming environment and music editor designed to bridge composition and coding practices, coupled with live input from crowd footage analysed using machine vision. Various techniques and examples (notably from BBC Music Day) are explored over two 1-hour sessions. The first offers a practical introduction to crowd-driven music, and the second supports delegates in developing their own original works. The workshop is open to participants of any aesthetic and ability; all that is required is a Mac or Windows computer. An optional introduction to basic coding practices in Manhattan is also offered.

Schedule:

- (optional) Manhattan Primer (1 hour)

- Introduction to Crowd-driven Music (1 hour)

- Crowd-driven Music Masterclass (1 hour)

URLs:

Crowd-driven Music Video Examples (mp4) – http://nash.audio/manhattan/nime2020/

Manhattan Software (for Mac and Windows) – http://nash.audio/manhattan